Overview: Computer Vision in the Built Environment

This is a two-part series created by urban planning and urban technology students at the University of Michigan for the Urban AI course. Part I is an overview of a topic of interest, and Part II is a replicable code tutorial.

(Part I) Overview: Computer Vision in the Built Environment

(Part II) Tutorial: AI-powered Traffic Analysis

Introduction

Modern cities and infrastructure are increasingly data-driven, yet the evaluation and monitoring of these built environments have traditionally been complex, labor-intensive, and time-consuming. Historically, engineers and planners manually inspected buildings, bridges, and urban spaces—a process that was not only subjective but also inefficient. As urbanization and infrastructure complexity grew, so did the need for automated and accurate evaluation methods.

In recent years, artificial intelligence (AI), and computer vision in particular, has transformed the way evaluations are conducted in these fields. With computer vision, planners can automate data collection and analysis, producing more accurate and timely insights that help them optimize designs, monitor structural conditions in real time, and support predictive maintenance of infrastructure assets. This integration of AI into the built environment is changing how we manage urban spaces and can lead to more resilient, sustainable, and safe communities.

What is Computer Vision?

Definition

At its most basic level, computer vision converts raw image data (arrays of pixel values) into structured, actionable insights. Unlike human vision, which draws on years of experience and context, computer vision systems rely on mathematical algorithms and machine learning models to identify patterns, objects, and spatial relationships in visual data. This enables automated decision-making and control across many industries, including architecture, construction, and infrastructure management[1].

How Does It Work?

Computer vision relies on two essential technologies: a type of machine learning called deep learning, and convolutional neural networks (CNNs)[2].

Machine learning uses algorithmic models that enable a computer to teach itself about the context of visual data. If enough data is fed through the model, the computer will “look” at the data and teach itself to tell one image from another. Algorithms enable the machine to learn by itself, rather than someone programming it to recognize an image[3].

The advent of deep learning, and specifically CNNs, has revolutionized computer vision. These networks automatically learn multi-level representations—from basic edges and textures in early layers to complex object parts in later layers—by optimizing millions of parameters through training on large datasets. This self-learning capability has dramatically improved accuracy and adaptability in tasks like image classification and object detection.

Much like a human making out an image at a distance, a CNN first discerns hard edges and simple shapes, then fills in information as it runs iterations of its predictions. A CNN is used to understand single images. A recurrent neural network (RNN) is used in a similar way for video applications to help computers understand how pictures in a series of frames are related to one another[3].
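To make the "edges first" idea concrete, here is a minimal NumPy sketch of what one early CNN layer does: slide a 3×3 filter over a grayscale image (convolution), keep only positive responses (ReLU), and downsample by taking the maximum of each 2×2 block (max pooling). The vertical-edge kernel is hand-set for illustration; in a trained CNN these weights are learned from data. The toy image is hypothetical.

```python
import numpy as np

def conv2d_valid(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Slide `kernel` over `image` without padding (the cross-correlation
    that CNN libraries call 'convolution')."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def relu(x: np.ndarray) -> np.ndarray:
    """Zero out negative responses."""
    return np.maximum(x, 0.0)

def max_pool(x: np.ndarray, size: int = 2) -> np.ndarray:
    """Keep the largest value in each non-overlapping size x size block."""
    h, w = x.shape[0] // size, x.shape[1] // size
    trimmed = x[:h * size, :w * size]
    return trimmed.reshape(h, size, w, size).max(axis=(1, 3))

# Toy 6x8 grayscale image: dark on the left, bright from column 5 (a vertical edge).
image = np.zeros((6, 8))
image[:, 5:] = 1.0

# Hand-set vertical-edge filter; a trained CNN would learn weights like these.
kernel = np.array([[-1.0, 0.0, 1.0],
                   [-1.0, 0.0, 1.0],
                   [-1.0, 0.0, 1.0]])

filtered = conv2d_valid(image, kernel)  # 4x6 map of edge responses
detected = relu(filtered)               # drop negative responses
condensed = max_pool(detected)          # 2x3 summary; each row is [0, 3, 3]
print(condensed)
```

The pooled map is nonzero only in the right-hand columns, i.e. where the edge is, which is the kind of low-level feature that deeper layers then combine into object parts.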

Work Process

Image Acquisition: The process begins with capturing images or video through sensors or cameras. This is akin to the retina capturing visual stimuli in the human eye.

Image Processing: Raw image data is often noisy and needs to be processed for better analysis. Techniques such as filtering, edge detection, and segmentation are employed to enhance and clarify the image content.
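As a minimal sketch of two of these techniques, assuming a grayscale image stored as a 2-D float array: a 3×3 mean (box) filter suppresses pixel noise, and a global threshold then segments the image into foreground and background. Real pipelines usually rely on library routines (for example in OpenCV or scikit-image) and adaptive thresholds; the fixed threshold and the synthetic scene here are illustrative assumptions.

```python
import numpy as np

def box_filter(image: np.ndarray) -> np.ndarray:
    """Replace each pixel with the mean of its 3x3 neighborhood (edge-padded)."""
    padded = np.pad(image, 1, mode="edge")
    h, w = image.shape
    out = np.zeros_like(image, dtype=float)
    for di in range(3):
        for dj in range(3):
            out += padded[di:di + h, dj:dj + w]
    return out / 9.0

def threshold_segment(image: np.ndarray, threshold: float) -> np.ndarray:
    """Boolean mask: True where the pixel is brighter than `threshold`."""
    return image > threshold

rng = np.random.default_rng(0)
# Hypothetical 20x20 scene: a bright 8x8 object on a dark background, plus noise.
clean = np.zeros((20, 20))
clean[6:14, 6:14] = 1.0
noisy = clean + rng.normal(0.0, 0.2, size=clean.shape)

smoothed = box_filter(noisy)
mask = threshold_segment(smoothed, threshold=0.5)
# Roughly the object's 64 pixels; smoothing softens its corners.
print("foreground pixels:", int(mask.sum()))
```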

Feature Extraction: This involves identifying and describing important components (features) of the image, such as edges, textures, or key points. Traditional approaches used handcrafted algorithms like SIFT or HOG, but today deep learning (especially Convolutional Neural Networks, or CNNs) automates this step by learning hierarchical features directly from data. Kaggle's computer vision course, for example, describes feature extraction in a CNN as three basic operations: filtering an image for a particular feature (convolution), detecting that feature within the filtered image (ReLU), and condensing the image to enhance the features (maximum pooling)[4].

Source: Ryan Holbrook and Alexis Cook

Object Detection/Tracking: This step scans images or videos and finds target objects, and then tracks movements of detected objects as they navigate in an environment. For example, object tracking can be used in autonomous driving to track pedestrians on sidewalks or as they cross the road[5].
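Tracking is usually layered on top of a detector: each frame yields bounding boxes, and the tracker links boxes across frames into trajectories. The sketch below is a deliberately simple greedy nearest-centroid tracker, chosen for readability. Production trackers such as SORT or DeepSORT add motion models (e.g. Kalman filters) and appearance features. The `CentroidTracker` class, the `max_distance` gate, and the `(x1, y1, x2, y2)` box format are hypothetical.

```python
import math
from itertools import count

Box = tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels

def centroid(box: Box) -> tuple[float, float]:
    x1, y1, x2, y2 = box
    return ((x1 + x2) / 2, (y1 + y2) / 2)

class CentroidTracker:
    """Greedy nearest-centroid tracker (illustrative, no motion model)."""

    def __init__(self, max_distance: float = 50.0):
        self.max_distance = max_distance
        self.tracks: dict[int, tuple[float, float]] = {}  # track id -> last centroid
        self._ids = count()

    def update(self, boxes: list[Box]) -> dict[int, tuple[float, float]]:
        unmatched = [centroid(b) for b in boxes]
        new_tracks: dict[int, tuple[float, float]] = {}
        # Greedily match each existing track to its closest unclaimed detection.
        for track_id, last in self.tracks.items():
            if not unmatched:
                break
            best = min(unmatched, key=lambda c: math.dist(c, last))
            if math.dist(best, last) <= self.max_distance:
                new_tracks[track_id] = best
                unmatched.remove(best)
        # Detections left over start new tracks.
        for c in unmatched:
            new_tracks[next(self._ids)] = c
        self.tracks = new_tracks  # tracks unmatched this frame are dropped
        return dict(self.tracks)

tracker = CentroidTracker(max_distance=50.0)
frame1 = tracker.update([(0, 0, 20, 40), (200, 0, 220, 40)])
frame2 = tracker.update([(10, 0, 30, 40), (205, 5, 225, 45)])
print(frame2)  # same two ids; each pedestrian moved a few pixels
```

Because matching is greedy, results can depend on track order when objects are close together; that is one reason real trackers solve a global assignment problem instead.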

Image Classification: This task assigns a single label or category to an entire image. For example, an aerial photo might be classified as “urban” or “rural.” CNNs excel at this by learning to recognize patterns from vast labeled datasets. A convolutional classifier, which pairs a convolutional base that extracts features with a dense head that assigns the class, is one way to perform this step[4].

Source: Ryan Holbrook and Alexis Cook

Post-Processing and Decision Making: Once features have been extracted and objects classified or detected, the results are refined and then integrated into larger systems. The final step involves making informed decisions based on the extracted information. This could range from identifying objects in a scene to navigating an environment autonomously.
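One widely used refinement step for object detectors is non-maximum suppression (NMS). When several overlapping boxes are predicted for the same object, NMS keeps the highest-scoring one and discards the others. Below is a minimal pure-Python sketch, assuming boxes given as `(x1, y1, x2, y2)` with one confidence score each. The 0.5 IoU threshold is a typical but arbitrary choice, and the detections are hypothetical.

```python
Box = tuple[float, float, float, float]  # (x1, y1, x2, y2)

def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def nms(boxes: list[Box], scores: list[float], iou_threshold: float = 0.5) -> list[int]:
    """Greedy NMS: return indices of kept boxes, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: list[int] = []
    for i in order:
        # Keep box i only if it does not overlap too much with any kept box.
        if all(iou(boxes[i], boxes[k]) < iou_threshold for k in keep):
            keep.append(i)
    return keep

# Two overlapping detections of one car, plus a separate pedestrian.
boxes = [(100, 100, 200, 180), (105, 102, 205, 185), (400, 120, 430, 200)]
scores = [0.92, 0.85, 0.78]
print(nms(boxes, scores))  # [0, 2]
```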

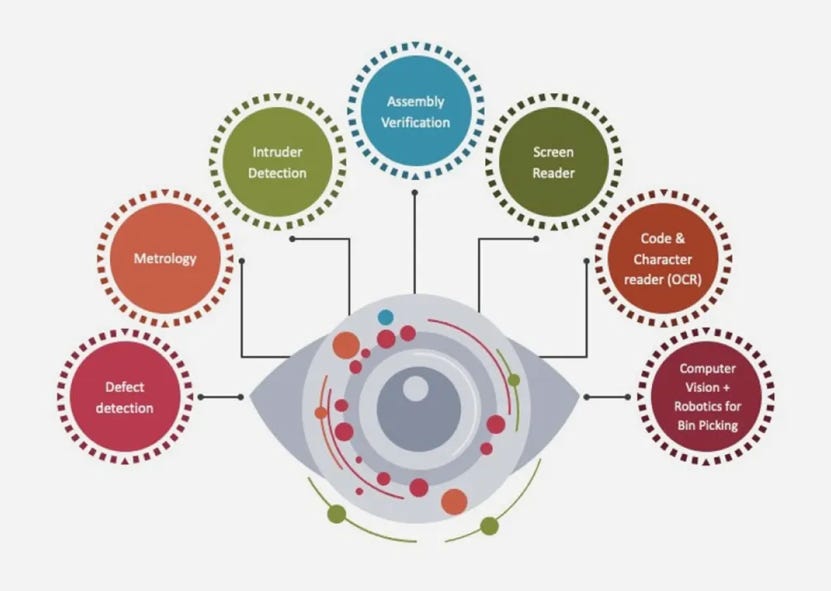

Applications of Computer Vision

Computer vision has already been applied in multiple fields such as autonomous driving, security surveillance, and retail analytics. In the field of urban planning, AI-driven computer vision tools are also beginning to play a role in planning practices. Computer vision can help analyze urban land, cityscapes, traffic patterns, and pedestrian behavior, reducing costs and providing deeper insights into urban dynamics to optimize urban design and enhance safety.

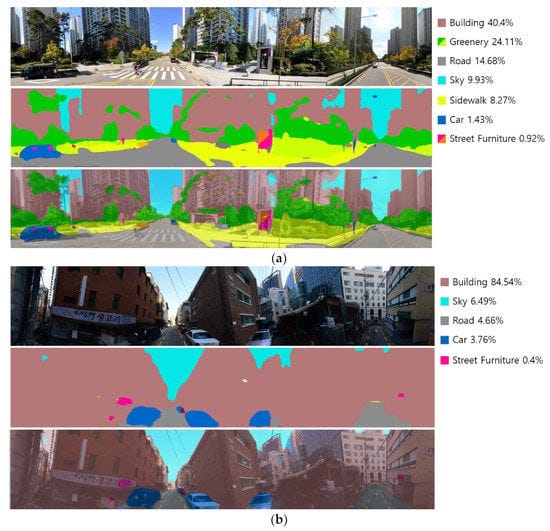

Street Walkability Analysis

Computer vision can help create more pedestrian-friendly streets. Using deep learning models such as Convolutional Neural Networks (CNNs), it can extract and analyze factors such as lighting, greenery, sidewalk conditions, and building facades to assess the overall quality of the street environment. AI models trained on large datasets can automatically classify street features, giving urban planners insights they can use to improve walkability.
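Once a semantic segmentation model has labeled every pixel of a street-level image, simple streetscape indicators can be computed as the share of pixels in each class, for example how much of the view is greenery or sidewalk. The sketch below assumes a hypothetical label map and hypothetical class IDs. The indicators and models used in actual walkability studies may differ.

```python
import numpy as np

# Hypothetical class IDs produced by a street-scene segmentation model.
CLASSES = {0: "other", 1: "vegetation", 2: "sidewalk", 3: "building", 4: "sky"}

def class_shares(label_map: np.ndarray) -> dict[str, float]:
    """Fraction of image pixels assigned to each class."""
    ids, counts = np.unique(label_map, return_counts=True)
    total = label_map.size
    shares = {name: 0.0 for name in CLASSES.values()}
    for class_id, n in zip(ids, counts):
        shares[CLASSES.get(int(class_id), "other")] += float(n) / total
    return shares

# Toy 4x5 label map standing in for a real segmentation output.
label_map = np.array([
    [4, 4, 4, 1, 1],
    [3, 3, 1, 1, 1],
    [3, 3, 0, 0, 1],
    [2, 2, 2, 0, 0],
])
shares = class_shares(label_map)
print({k: round(v, 2) for k, v in shares.items()})  # vegetation covers 30% of this view
```

Averaging such shares over many images along a street segment is one way to turn per-image outputs into a street-level score that planners can compare across a city.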

Recent advances in computer vision and AI have also made real-time pedestrian safety monitoring possible. AI-powered crosswalk systems use cameras and deep learning models to detect pedestrian movement, predict potential hazards, and control traffic signals to improve safety. Trials in places such as New York and Singapore are reported to have significantly reduced pedestrian accidents[7].
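A basic building block of such crosswalk systems is deciding whether a detected pedestrian is inside the crosswalk. This minimal sketch assumes two things. First, the detector returns bounding boxes in image coordinates. Second, the crosswalk is drawn once as a polygon in the same camera view. Both inputs here are hypothetical. The sketch takes the bottom-center of each box as the pedestrian's ground contact point and tests it with the ray-casting point-in-polygon rule. The signal-control logic that would act on the result is outside its scope.

```python
Point = tuple[float, float]

def point_in_polygon(p: Point, polygon: list[Point]) -> bool:
    """Ray casting: toggle 'inside' for every polygon edge crossed by a ray
    running from p toward +x."""
    x, y = p
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        if (y1 > y) != (y2 > y):  # edge straddles the horizontal line through p
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside

def pedestrians_in_crosswalk(boxes: list[tuple[float, float, float, float]],
                             crosswalk: list[Point]) -> int:
    """Count boxes (x1, y1, x2, y2) whose bottom-center lies in the crosswalk."""
    feet = [((x1 + x2) / 2, y2) for x1, y1, x2, y2 in boxes]
    return sum(point_in_polygon(f, crosswalk) for f in feet)

# Hypothetical crosswalk polygon (pixels), skewed by camera perspective.
crosswalk = [(100, 400), (540, 400), (600, 470), (60, 470)]
detections = [(200, 300, 240, 430), (700, 300, 740, 430)]
print(pedestrians_in_crosswalk(detections, crosswalk))  # 1
```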

Intelligent Transportation and Infrastructure

Computer vision plays a crucial role in intelligent transportation and infrastructure management. By leveraging deep learning and convolutional neural networks (CNNs), it can automatically analyze traffic flow, monitor road conditions, and optimize infrastructure management[8]. These technologies enable urban planners to manage transportation systems more efficiently, reduce congestion, enhance safety, and improve infrastructure maintenance strategies.

Road Condition Detection and Maintenance

Computer vision can be used to detect damage on road surfaces, such as potholes, cracks, and wear. Compared to traditional manual inspections, AI-powered road monitoring systems can identify problems faster and more accurately through automation, while also predicting road aging trends[8].

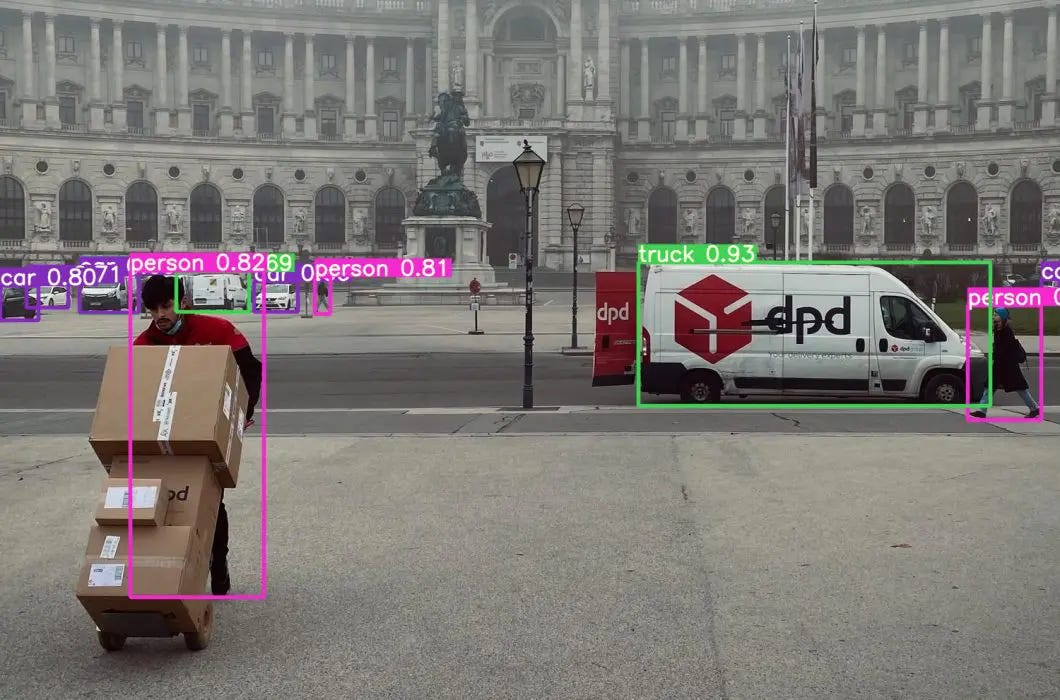

Traffic Flow and Pedestrian Behavior Analysis

Computer vision, combined with AI algorithms, can monitor and analyze traffic flow and pedestrian behavior in real-time, providing intelligent optimization solutions for urban traffic management. AI-powered cameras can automatically detect moving vehicles, pedestrians, and traffic signals, identifying anomalies such as congestion, speeding, and accidents[10]. These technologies help improve urban traffic management, enhance road safety, and optimize traffic signal systems.
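A common way to turn tracked detections into traffic counts is a virtual counting line. A vehicle is counted when its tracked centroid crosses from one side of the line to the other between consecutive frames. The sketch assumes trajectories that a tracker has already produced, in the form `{track_id: [(x, y), ...]}`, and a horizontal line at a hypothetical image row. It illustrates the idea and is not the method of any system cited here.

```python
def count_line_crossings(tracks: dict[int, list[tuple[float, float]]],
                         line_y: float) -> dict[str, int]:
    """Count tracks crossing the horizontal line y = line_y, by direction.

    Image y grows downward, so 'down' means moving toward the bottom of the
    frame. Each track is counted at most once.
    """
    counts = {"down": 0, "up": 0}
    for points in tracks.values():
        for (_, y0), (_, y1) in zip(points, points[1:]):
            if y0 < line_y <= y1:
                counts["down"] += 1
                break
            if y1 < line_y <= y0:
                counts["up"] += 1
                break
    return counts

# Hypothetical centroid trajectories, one point per frame.
tracks = {
    1: [(320, 180), (322, 230), (325, 290)],  # crosses y=250 moving down
    2: [(500, 400), (498, 320), (497, 240)],  # crosses y=250 moving up
    3: [(100, 100), (105, 120), (110, 140)],  # never reaches the line
}
print(count_line_crossings(tracks, line_y=250))  # {'down': 1, 'up': 1}
```

Dividing counts by the observation window gives a flow rate (vehicles per hour), and timing the same tracks between two lines a known distance apart is a simple way to estimate speed.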

Case Studies

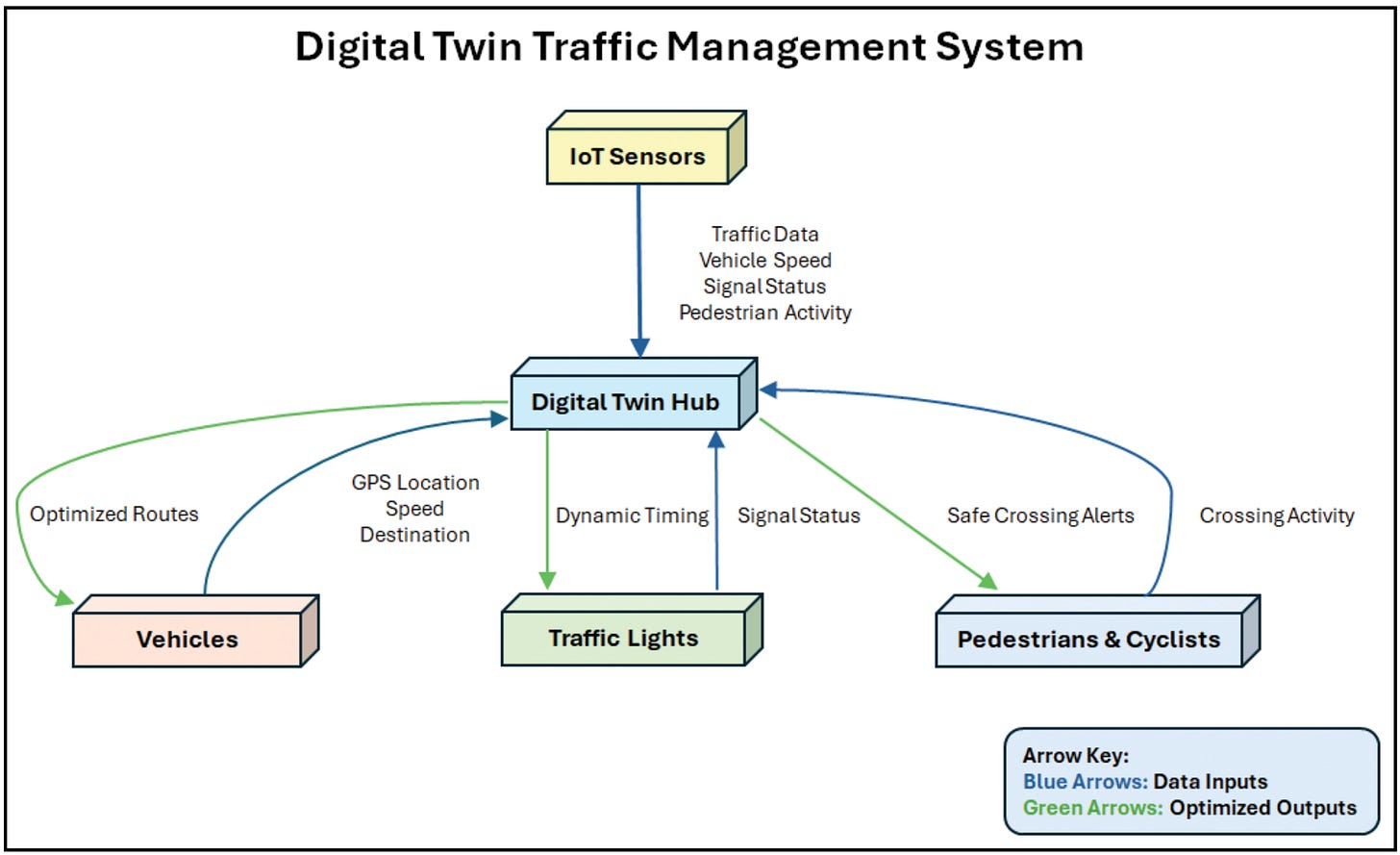

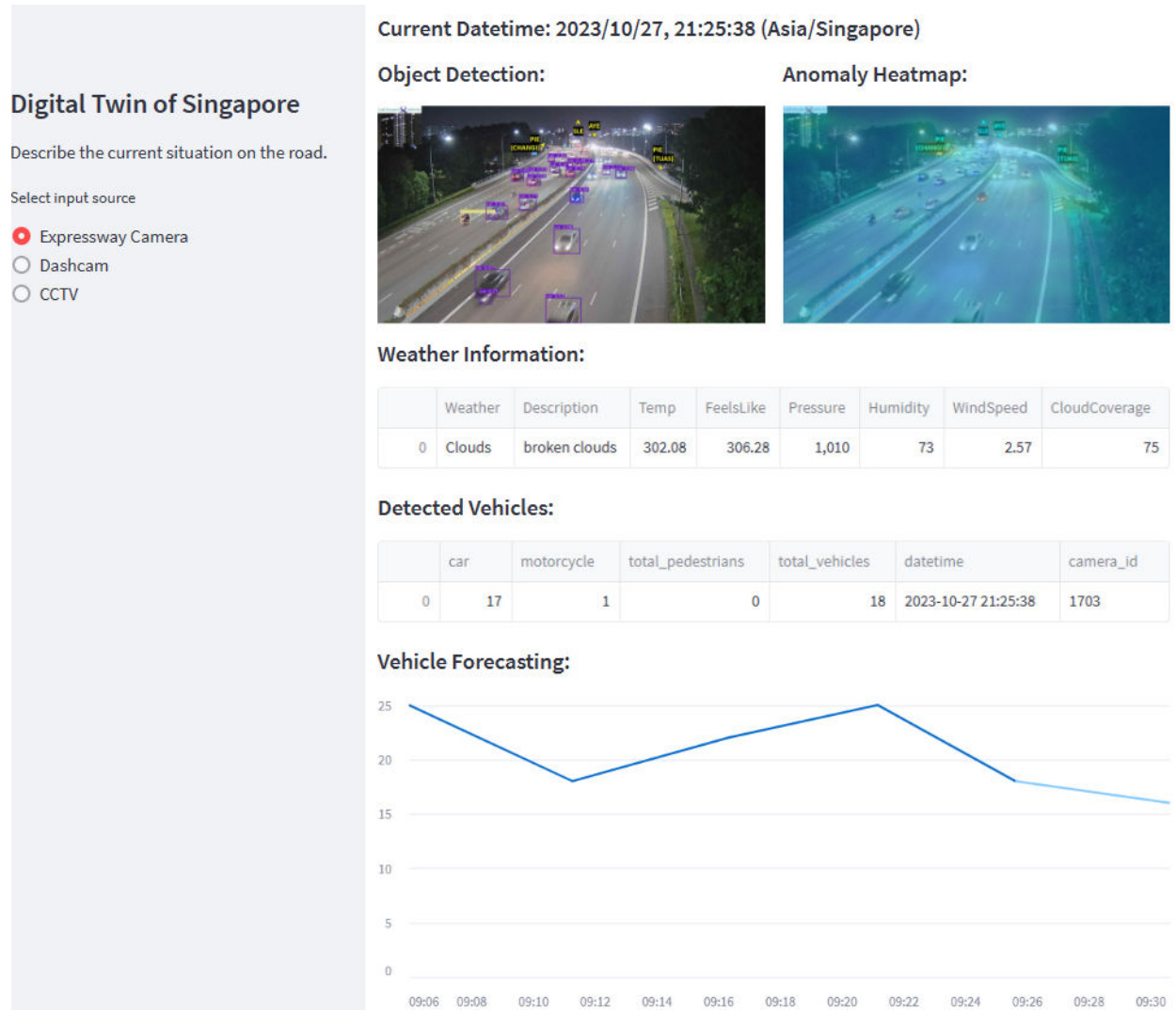

AI-Based Digital Twin Framework for Urban Traffic Management in Singapore

Singapore has been at the forefront of integrating AI and urban visual intelligence into traffic management. One AI-based digital twin framework for the city integrates real-time data from APIs and dashcams, offering capabilities such as city information visualization and urban climate simulation. It draws on data sources including Datamall, OpenWeather, mobile dashcams, and YouTube footage. Its AI layer processes these data into a user-friendly Digital Twin, with models trained for classification and prediction using footage from either static expressway cameras or mobile dashcams[11].

The framework includes several visual intelligence modules for tasks such as data anonymization, vehicle monitoring using YOLOv7, localized weather detection with EfficientNet-B8, road surface monitoring using ResNet50, and abnormal incident detection[12]. It aims to improve traffic management and public safety and to shorten commuting times.
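In pipelines like this, data anonymization typically means finding privacy-sensitive regions such as faces and license plates and obscuring them before footage is stored or shared. The detection step is out of scope here. This sketch assumes the boxes are already known and pixelates each region by replacing it with blocks of its average color. The `pixelate_regions` helper, block size, and box coordinates are hypothetical, and this is not necessarily how the Singapore framework implements its module.

```python
import numpy as np

def pixelate_regions(frame: np.ndarray,
                     boxes: list[tuple[int, int, int, int]],
                     block: int = 8) -> np.ndarray:
    """Return a copy of `frame` (H x W x C) with each (x1, y1, x2, y2) box pixelated."""
    out = frame.copy()
    for x1, y1, x2, y2 in boxes:
        for by in range(y1, y2, block):
            for bx in range(x1, x2, block):
                ey, ex = min(by + block, y2), min(bx + block, x2)
                tile = out[by:ey, bx:ex]  # a view into `out`
                # Replace the tile with its mean color, destroying fine detail.
                tile[...] = tile.mean(axis=(0, 1), keepdims=True).astype(tile.dtype)
    return out

rng = np.random.default_rng(42)
frame = rng.integers(0, 256, size=(120, 160, 3), dtype=np.uint8)  # stand-in video frame
plate_box = (40, 60, 72, 76)  # hypothetical detected license plate
anonymized = pixelate_regions(frame, [plate_box], block=8)
```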

AI-Based Smart Traffic Management in Barcelona

Barcelona has implemented an advanced intelligent traffic management system that uses real-time data to monitor traffic flow and dynamically adjust traffic lights[13]. This system helps optimize traffic movement and reduce congestion across the city.

Recently, as part of its 2025 Sustainable Mobility Plan, Barcelona announced a new initiative to introduce AI-driven smart traffic lights. These lights will use sensors and AI algorithms to analyze traffic density and automatically adjust signal timings, aiming to cut congestion by 20% and improve overall urban mobility[14].
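The sources above do not describe the control algorithm behind Barcelona's planned lights. As a generic illustration of "analyzing traffic density and adjusting signal timings", the sketch below splits a fixed cycle's green time among approaches in proportion to camera-reported vehicle counts, while guaranteeing each approach a minimum green. The `split_green_time` function, cycle length, lost time, minimum green, and counts are all hypothetical. Deployed adaptive systems use considerably more elaborate optimization.

```python
def split_green_time(counts: dict[str, int], cycle_s: float = 90.0,
                     lost_s: float = 10.0, min_green_s: float = 10.0) -> dict[str, float]:
    """Share a signal cycle's usable green time in proportion to demand.

    Every approach first receives `min_green_s`; the remaining green time is
    divided in proportion to each approach's detected vehicle count.
    """
    usable = cycle_s - lost_s  # time left after clearance (yellow/all-red) intervals
    spare = usable - min_green_s * len(counts)
    if spare < 0:
        raise ValueError("cycle too short for the minimum greens")
    total = sum(counts.values())
    greens = {}
    for approach, n in counts.items():
        share = n / total if total else 1 / len(counts)  # split evenly if no demand
        greens[approach] = min_green_s + spare * share
    return greens

# Hypothetical camera counts at a two-phase intersection.
print(split_green_time({"north-south": 36, "east-west": 12}))
# {'north-south': 55.0, 'east-west': 25.0}
```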

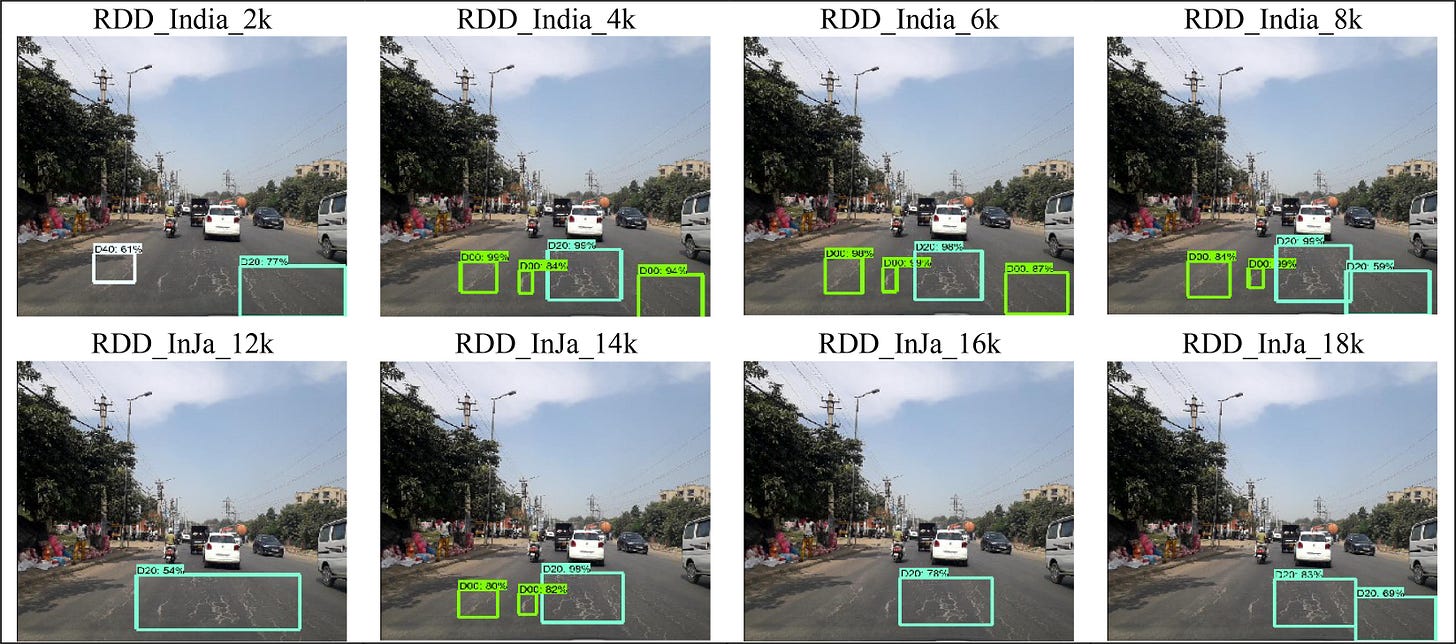

Smartphone-Based Road Monitoring in Japan

Japan has implemented smartphone-based road monitoring solutions that use AI models to analyze dashcam footage and mobile images, detecting road defects such as cracks and potholes[16]. Because they rely on images captured by ordinary smartphones, these systems enable timely maintenance and improve road safety: they increase coverage by collecting data from daily commuters and speed up response times by prioritizing repairs based on real-time citizen reports. For example, Lightweight Road Manager (LRM), developed by Maeda et al., is a smartphone application that collects images and detects roadway deficiencies on Japanese roads in real time[16].

Building on Japan's methods, the road damage dataset was broadened to more than 26,000 images that include roads in other countries, such as India and the Czech Republic[17]. Applying a similar strategy elsewhere could help cities in developing countries reduce costs compared with traditional road monitoring vehicles.
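To illustrate how citizen reports could feed a repair queue, as in the prioritization idea described for smartphone-based monitoring, here is a minimal sketch. It aggregates smartphone detections by road segment and ranks segments by a severity-weighted score. The damage types mirror the defects mentioned above (cracks and potholes). The severity weights, segment IDs, confidence cutoff, and `Report` format are hypothetical, and LRM and related systems may prioritize differently.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical severity weights per damage type (higher = more urgent).
SEVERITY = {"longitudinal_crack": 1.0, "transverse_crack": 1.0,
            "alligator_crack": 2.0, "pothole": 3.0}

@dataclass
class Report:
    segment_id: str    # road segment the phone was on (e.g. from GPS map-matching)
    damage_type: str   # class predicted by the on-device detector
    confidence: float  # detector confidence in [0, 1]

def prioritize(reports: list[Report], min_confidence: float = 0.5) -> list[tuple[str, float]]:
    """Rank road segments by summed severity x confidence of their reports."""
    scores: dict[str, float] = defaultdict(float)
    for r in reports:
        if r.confidence >= min_confidence:  # ignore weak detections
            scores[r.segment_id] += SEVERITY.get(r.damage_type, 1.0) * r.confidence
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)

reports = [
    Report("seg-12", "pothole", 0.9),
    Report("seg-12", "pothole", 0.8),
    Report("seg-07", "alligator_crack", 0.7),
    Report("seg-03", "longitudinal_crack", 0.95),
    Report("seg-03", "pothole", 0.3),  # below the cutoff, ignored
]
for segment, score in prioritize(reports):
    print(segment, round(score, 2))
```

Summing across reports means a segment flagged by many commuters rises in the queue. A real system would also deduplicate repeated sightings of the same defect.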

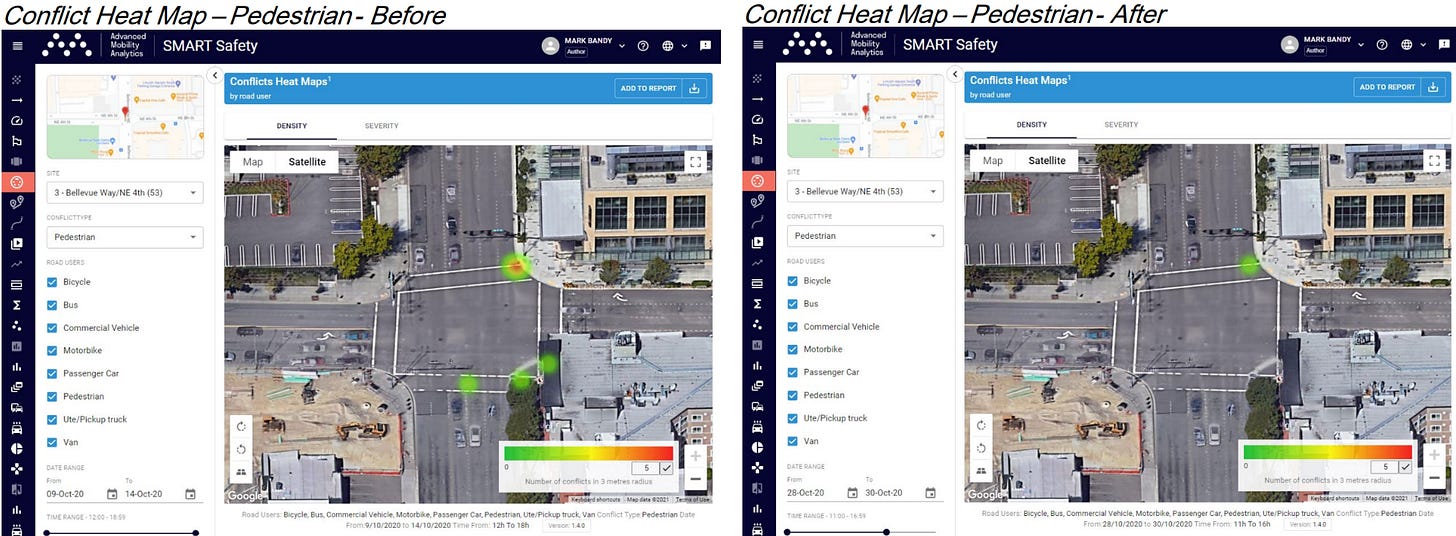

Video Analytics-Based Pedestrian Interval Evaluation in Bellevue

The City of Bellevue, Washington, conducted an evaluation of Leading Pedestrian Intervals (LPIs) using video analytics to enhance pedestrian safety at intersections[18]. In partnership with Microsoft, Jacobs, and the Advanced Mobility Analytics Group (AMAG), Bellevue analyzed before-and-after video data from 20 intersections equipped with traffic cameras. The data encompassed over 650,000 road users, and video analytics were employed to identify and assess conflicts[18].

The video data was processed by Microsoft's Edge Video Services (EVS) to classify and track different road users, such as pedestrians and vehicles. Then AMAG's SMART Transport Analytics Platform, powered by AI-driven video analytics, analyzed this data to identify potential conflicts by examining metrics like post-encroachment time (PET) and time-to-collision (TTC)[18]. The platform generated visualizations like Conflict Heat Maps and Speed and Trajectory Maps, along with Conflict Rate Data, which were then compared between the before-and-after LPI implementation periods to assess the effectiveness in improving safety.
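Both surrogate safety measures can be computed from tracked trajectories. The sketch below uses the standard definitions in simplified form. Time-to-collision (TTC) covers two road users on the same path: the remaining gap divided by the speed at which the follower closes in on the leader. Post-encroachment time (PET) is the interval between one road user leaving a conflict area and the next one entering it. This is not the SMART platform's implementation. The inputs are hypothetical, and real analyses work with 2-D trajectories and road-user dimensions.

```python
import math

def time_to_collision(gap_m: float, v_follower: float, v_leader: float) -> float:
    """TTC in seconds for two road users on the same path (speeds in m/s).

    gap_m is the distance from the follower's front to the leader's rear.
    If the follower is not faster, they are not closing in and TTC is infinite.
    """
    closing_speed = v_follower - v_leader
    return gap_m / closing_speed if closing_speed > 0 else math.inf

def post_encroachment_time(first_exit_s: float, second_entry_s: float) -> float:
    """PET in seconds: the first user leaves the conflict area, the second enters.
    Smaller values indicate a closer call."""
    return second_entry_s - first_exit_s

# Hypothetical measurements extracted from tracked video.
print(time_to_collision(gap_m=12.0, v_follower=14.0, v_leader=10.0))   # 3.0
print(post_encroachment_time(first_exit_s=31.2, second_entry_s=32.0))  # about 0.8
```

Counting events whose TTC or PET falls below a chosen threshold, per road user observed, yields conflict rates that can be compared before and after a treatment such as an LPI.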

LPIs provide pedestrians with a head start by displaying the walk signal several seconds before adjacent vehicular traffic receives a green light, thereby reducing conflicts between pedestrians and turning vehicles. The implementation of LPIs resulted in a 42.3% reduction in vehicle-pedestrian conflicts and a 21% reduction in vehicle-vehicle conflict rates[18]. These significant improvements led Bellevue to expand the use of LPIs throughout its downtown area starting in 2022.

Future Trends

Today, computer vision technology still carries certain risks, especially in the absence of comprehensive regulatory frameworks.

Data Accuracy: The accuracy of computer vision systems depends on the quality of the training data. If the input data is noisy, low-resolution, or incomplete, AI may make incorrect predictions.
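One inexpensive safeguard is to screen incoming frames for quality before they reach a model. A common blur heuristic is the variance of the Laplacian: sharp images produce strong second-derivative responses at edges, while blurred ones do not. The sketch below implements it in NumPy on a synthetic frame. `BLUR_THRESHOLD` is a hypothetical value that would need calibrating per camera and scene.

```python
import numpy as np

def laplacian_variance(gray: np.ndarray) -> float:
    """Variance of the 4-neighbor Laplacian of a 2-D grayscale image."""
    g = gray.astype(float)
    lap = (g[:-2, 1:-1] + g[2:, 1:-1] + g[1:-1, :-2] + g[1:-1, 2:]
           - 4.0 * g[1:-1, 1:-1])
    return float(lap.var())

def box_blur(gray: np.ndarray, k: int = 5) -> np.ndarray:
    """k x k mean filter (valid region only), used here to simulate a blurry camera."""
    g = gray.astype(float)
    h, w = g.shape[0] - k + 1, g.shape[1] - k + 1
    return sum(g[i:i + h, j:j + w] for i in range(k) for j in range(k)) / (k * k)

BLUR_THRESHOLD = 1000.0  # hypothetical; calibrate on known-good frames per camera

rng = np.random.default_rng(1)
sharp = rng.integers(0, 256, size=(64, 64)).astype(float)  # high-detail stand-in frame
blurry = box_blur(sharp)

for name, frame in [("sharp", sharp), ("blurry", blurry)]:
    score = laplacian_variance(frame)
    print(name, round(score), "OK" if score >= BLUR_THRESHOLD else "flag for review")
```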

Public Privacy and Ethical Challenges: Computer vision is widely used in surveillance and identity recognition, but excessive data collection may infringe on personal privacy. Without strict regulatory oversight, AI-driven surveillance systems may lead to mass data harvesting, unauthorized monitoring, and ethical dilemmas regarding consent and data security.

Algorithmic Bias and Fairness: AI systems are susceptible to inheriting and amplifying biases present in training data[21]. For example, if a computer vision model is predominantly trained on data from specific groups, it may lead to inaccurate recognition of other groups.
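A basic first check for this kind of bias is disaggregated evaluation. Instead of reporting one overall accuracy, compute it separately for each group in a labeled test set and examine the gap. The sketch below does this for a hypothetical pedestrian detector evaluated on two hypothetical groups. The records are invented for illustration, and a real audit would also look at error types, sample sizes, and uncertainty.

```python
from collections import defaultdict

def accuracy_by_group(records: list[tuple[str, bool, bool]]) -> dict[str, float]:
    """records: (group, true_label, predicted_label). Returns accuracy per group."""
    correct: dict[str, int] = defaultdict(int)
    total: dict[str, int] = defaultdict(int)
    for group, truth, pred in records:
        total[group] += 1
        correct[group] += int(truth == pred)
    return {g: correct[g] / total[g] for g in total}

# Hypothetical test results: was a pedestrian present, and did the model detect one?
records = (
    [("group_A", True, True)] * 45 + [("group_A", True, False)] * 5
    + [("group_B", True, True)] * 30 + [("group_B", True, False)] * 20
)
acc = accuracy_by_group(records)
gap = max(acc.values()) - min(acc.values())
print(acc, f"gap = {gap:.2f}")  # group_A 0.9 vs group_B 0.6
```

A single overall accuracy of 0.75 would hide this 30-point gap, which is why per-group reporting is a common starting point for fairness checks.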

By addressing these challenges, researchers and policymakers can work towards developing more ethical, fair, and reliable computer vision systems that minimize bias, protect privacy, and enhance data accuracy.

Sources

[1] Wikipedia contributors. (n.d.). Computer vision. Wikipedia, The Free Encyclopedia. Retrieved March 13, 2025, from https://en.wikipedia.org/wiki/Computer_vision

[2] GeeksforGeeks. (2025, January 30). Computer vision. GeeksforGeeks. https://www.geeksforgeeks.org/computer-vision/

[3] IBM. (n.d.). Computer vision. IBM Think. Retrieved March 13, 2025, from https://www.ibm.com/think/topics/computer-vision

[4] Kaggle. (n.d.). Computer vision. Kaggle. Retrieved March 13, 2025, from https://www.kaggle.com/learn/computer-vision

[5] Intel. (2021, July 27). What is computer vision? Intel. https://www.intel.com/content/www/us/en/learn/what-is-computer-vision.html

[6] Lee, J., Kim, D., & Park, J. (2022). A machine learning and computer vision study of the environmental characteristics of streetscapes that affect pedestrian satisfaction. Sustainability, 14(9), 5730. https://doi.org/10.3390/su1409573

[7] Oluwafemidiakhoa. (2024, June 15). Guardians of the Crosswalk: AI and the Future of Pedestrian Safety. Kinomoto.Mag AI. https://medium.com/kinomoto-mag/guardians-of-the-crosswalk-ai-and-the-future-of-pedestrian-safety-b6bb57f2db31

[8] FlyPix AI. (2025, March 10). Best road damage detection tools for efficient maintenance. https://flypix.ai/blog/road-damage-detection-tools/

[9] Augmented A.I. (2023, May 25). Top 5 Object Tracking Methods: Enhancing Precision and Efficiency. https://www.augmentedstartups.com/blog/top-5-object-tracking-methods-enhancing-precision-and-efficiency

[10] Klingler, N. (2023, December 3). Object Tracking in Computer Vision. Viso.ai. https://viso.ai/deep-learning/object-tracking/

[11] Ropac, R., & DeFranco, J. F. (2025). Escape the traffic gridlock: Digital twins and the future of traffic management. IEEE Reliability Magazine, 2(1), 45–51. https://doi.org/10.1109/MRL.2025.3532206

[12] Aloupogianni, E., Doctor, F., Karyotis, C., Maniak, T., Tang, R., & Iqbal, R. (2024). An AI-based digital twin framework for intelligent traffic management in Singapore. 2024 International Conference on Electrical, Computer and Energy Technologies (ICECET), 1–6. https://doi.org/10.1109/ICECET61485.2024.10698642

[13] Smart Cities World. (2025, March 5). Barcelona pilots automated bus lane enforcement technology. https://www.smartcitiesworld.net/ai-and-machine-learning/barcelona-pilots-automated-bus-lane-enforcement-technology

[14] Kurrant News. (2025, February 20). Barcelona to reduce traffic congestion by 20% with AI-powered smart traffic lights. https://kurrant.com/kurrantly-news/barcelona-to-reduce-traffic-congestion-by-20-with-ai-powered-smart-traffic-lights/

[15] FlyPix AI. (2025, February 10). Road damage detection using AI and deep learning. https://flypix.ai/blog/road-damage-detection/

[16] Maeda, H., Sekimoto, Y., & Seto, T. (2016). Lightweight road manager: Smartphone-based automatic determination of road damage status by deep neural network. In Proceedings of the 5th ACM SIGSPATIAL International Workshop on Mobile Geographic Information Systems (MobiGIS '16) (pp. 37–45). Association for Computing Machinery. https://doi.org/10.1145/3004725.3004729

[17] Arya, D., Maeda, H., Ghosh, S. K., Toshniwal, D., Mraz, A., Kashiyama, T., & Sekimoto, Y. (2021). Deep learning-based road damage detection and classification for multiple countries. Automation in Construction, 132, 103935. https://doi.org/10.1016/j.autcon.2021.103935

[18] Bandy, M., & Tran, E. (2022). Evaluation of leading pedestrian intervals using video analytics. City of Bellevue. https://bellevuewa.gov/sites/default/files/media/pdf_document/2022/City-of-Bellevue-LPI-Video-Analytics-Summary-Evaluation-05082022-final.pdf

[19] Arun, A., Lyon, C., Sayed, T., Washington, S., Loewenherz, F., Akers, D., Ananthanarayanan, G., Shu, Y., Bandy, M., & Haque, M. M. (2023). Leading pedestrian intervals – Yay or nay? A before-after evaluation of multiple conflict types using an enhanced non-stationary framework integrating quantile regression into Bayesian hierarchical extreme value analysis. Accident Analysis & Prevention, 181, 106929. https://doi.org/10.1016/j.aap.2022.106929

[20] Office of the Victorian Information Commissioner. (n.d.). Artificial Intelligence and Privacy – Issues and Challenges. https://ovic.vic.gov.au/privacy/resources-for-organisations/artificial-intelligence-and-privacy-issues-and-challenges/

[21] Hardesty, L. (2018, February 11). Study finds gender and skin-type bias in commercial artificial-intelligence systems. MIT News. https://news.mit.edu/2018/study-finds-gender-skin-type-bias-artificial-intelligence-systems-0212