Text Messages to Dashboard for Public Reporting

Project Context and Goals:

For this project, I wanted to build something that could be conveniently used in an urban context and provide value to the public. For a different class, I had done a lot of field observations and analysis of detour infrastructure in Ann Arbor, and one of my conclusions for that project was that there were a lot of stray materials just lying around Ann Arbor. It made me wonder how the government could keep track of stuff like this. It didn’t make sense to me that the burden should be on the public to find the government agency or representative whose jurisdiction the leftover cones/signs would fall under and then report the issue to that agency or person. But it also didn’t make sense for the government to manually check construction sites for cones and signage in wrong, distracting, or random places. So I thought it would make sense to give the public a way to easily report these inconveniences through a simple text message, which would then be processed and sent to the department most relevant to the issue.

A solution such as this rightfully removes the burden from the public while also alerting government agencies to issues they need to attend to. So I made an application that processes text messages using natural language processing and then adds each processed message to a dashboard, categorized by the government department that should respond to it. I will share more details below.

Methods:

This is the procedure that my program follows to take in a public incident text message and strategically place it in a dashboard:

A user sends a text message to a Google Voice phone number

Google Voice automatically forwards the message details to Gmail

The email is filtered by its sender information and labelled

Every minute, a Google Apps Script function checks the labelled emails, keeps the most relevant information, creates a hash value, and writes the result to a Google Sheet

A Python program pulls the sheet data using the Google Sheets API

The Python program processes the sheet data with the ChatGPT API, extracting place names, event keywords, an urgency level, and the relevant department. This classification is entirely prompt-based. Here is my prompt:

You are an assistant helping city officials classify incident reports submitted by the public.

Please extract the following information from the incident message below:

1. The most prominent place name within the message

2. The most prominent 1 or 2 keywords that best represent the incident the message is describing

3. The urgency with which the event should be responded to on a scale of 1-10

4. The City Department that is best suited to respond to the incident. Choose between Police, Fire Department, Sanitation, Public Works, and Social Services. If there is not a clear department that you think should respond, or you do not think the event is unusual enough that it would require a department to respond to it, just return an empty string.

Message: "{message}"

Respond in JSON format:

{{
"place": [...],
"keywords": [...],
"urgency": "...",
"department": "..."
}}

The Python program then adds new columns with the processed data back to the Google Sheet

Simultaneously, a second Python program continuously checks the Google Sheet for new rows and builds a Streamlit dashboard that separates incident reports by department and sorts them by urgency
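One detail of the prompt is worth spelling out: the doubled braces `{{` and `}}` suggest it is a Python format string (via `str.format()` or an f-string), where `{message}` is the only placeholder and doubled braces come out as literal braces. The sketch below shows that step and one way the model's JSON reply could be turned into the four new sheet columns. It assumes the reply is bare JSON; the names `PROMPT_TEMPLATE`, `build_prompt`, and `parse_reply`, the abbreviated prompt text, and the fallback of urgency 1 for an unreadable value are illustrative assumptions, not the project's actual code.

```python
import json

# Abbreviated version of the prompt above (the instructions and items 1-4 are
# omitted here). In a str.format() template, "{{" and "}}" produce literal
# braces, and {message} is the only placeholder that gets filled in.
PROMPT_TEMPLATE = (
    "You are an assistant helping city officials classify incident reports "
    "submitted by the public.\n\n"
    'Message: "{message}"\n\n'
    "Respond in JSON format:\n"
    "{{\n"
    '"place": [...],\n'
    '"keywords": [...],\n'
    '"urgency": "...",\n'
    '"department": "..."\n'
    "}}"
)

# The five departments the prompt lets the model choose from.
DEPARTMENTS = {"Police", "Fire Department", "Sanitation", "Public Works", "Social Services"}


def build_prompt(message: str) -> str:
    """Insert the incident text into the template."""
    return PROMPT_TEMPLATE.format(message=message)


def _as_text(value) -> str:
    """Join a list of strings for one sheet cell; pass plain strings through."""
    if isinstance(value, str):
        return value
    return ", ".join(str(v) for v in value or [])


def parse_reply(reply: str) -> dict:
    """Convert the model's JSON reply into the four new sheet columns.

    Assumes the reply is bare JSON; real replies may need cleanup first.
    """
    data = json.loads(reply)
    try:
        urgency = int(data.get("urgency"))
    except (TypeError, ValueError):
        urgency = 1  # hypothetical fallback: treat an unreadable urgency as lowest
    urgency = max(1, min(urgency, 10))  # clamp to the prompt's 1-10 scale
    department = data.get("department", "")
    if not isinstance(department, str) or department not in DEPARTMENTS:
        department = ""  # empty string = no clear department, as the prompt allows
    return {
        "place": _as_text(data.get("place", [])),
        "keywords": _as_text(data.get("keywords", [])),
        "urgency": urgency,
        "department": department,
    }
```

For example, `parse_reply('{"place": ["State St"], "keywords": ["cones"], "urgency": "4", "department": "Public Works"}')` returns `{'place': 'State St', 'keywords': 'cones', 'urgency': 4, 'department': 'Public Works'}`. Validating the department against the same list the prompt offers keeps an unexpected answer from creating a new dashboard panel.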

All of this happens in near real time: the Google Apps Script checks the email label every minute, and the Streamlit dashboard refreshes every 20 seconds.
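Because the Apps Script runs every minute and the dashboard re-reads the sheet every 20 seconds, the Python side has to tell new rows apart from rows it has already handled, which is presumably one reason each row gets a hash value. A minimal sketch of that bookkeeping, assuming each row exposes its hash under a hypothetical `hash` key: `row_hash` shows one way such an ID could be computed in Python (the project computes its hash in Apps Script, and the fields hashed here are a guess), and `poll` is a plain loop standing in for the real refresh mechanism, with `fetch_rows` and `handle` as hypothetical callbacks for reading the sheet and updating the dashboard.

```python
import hashlib
import time
from typing import Callable, Iterable, List, Optional, Set


def row_hash(timestamp: str, sender: str, body: str) -> str:
    """Stable ID for one text message (the fields hashed are a guess)."""
    # Join with the ASCII unit separator so ("ab", "c") and ("a", "bc") differ.
    raw = "\x1f".join([timestamp, sender, body])
    return hashlib.sha256(raw.encode("utf-8")).hexdigest()


def new_rows(rows: Iterable[dict], seen: Set[str]) -> List[dict]:
    """Return rows whose hash is not in `seen`, and record them as seen."""
    fresh = []
    for row in rows:
        if row["hash"] not in seen:
            seen.add(row["hash"])
            fresh.append(row)
    return fresh


def poll(fetch_rows: Callable[[], List[dict]],
         handle: Callable[[List[dict]], None],
         interval_s: float = 20.0,
         max_cycles: Optional[int] = None) -> None:
    """Re-read the rows every `interval_s` seconds and pass on only new ones.

    `max_cycles=None` loops forever, like a long-running dashboard process.
    """
    seen: Set[str] = set()
    cycles = 0
    while max_cycles is None or cycles < max_cycles:
        fresh = new_rows(fetch_rows(), seen)
        if fresh:
            handle(fresh)
        cycles += 1
        time.sleep(interval_s)
```

A set of seen hashes keeps each check cheap even as the sheet grows; a production version would also need to persist `seen` across restarts, which this sketch does not do.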

See this folder for the two .py files, the Google script, and the Google Sheet.

Outcomes:

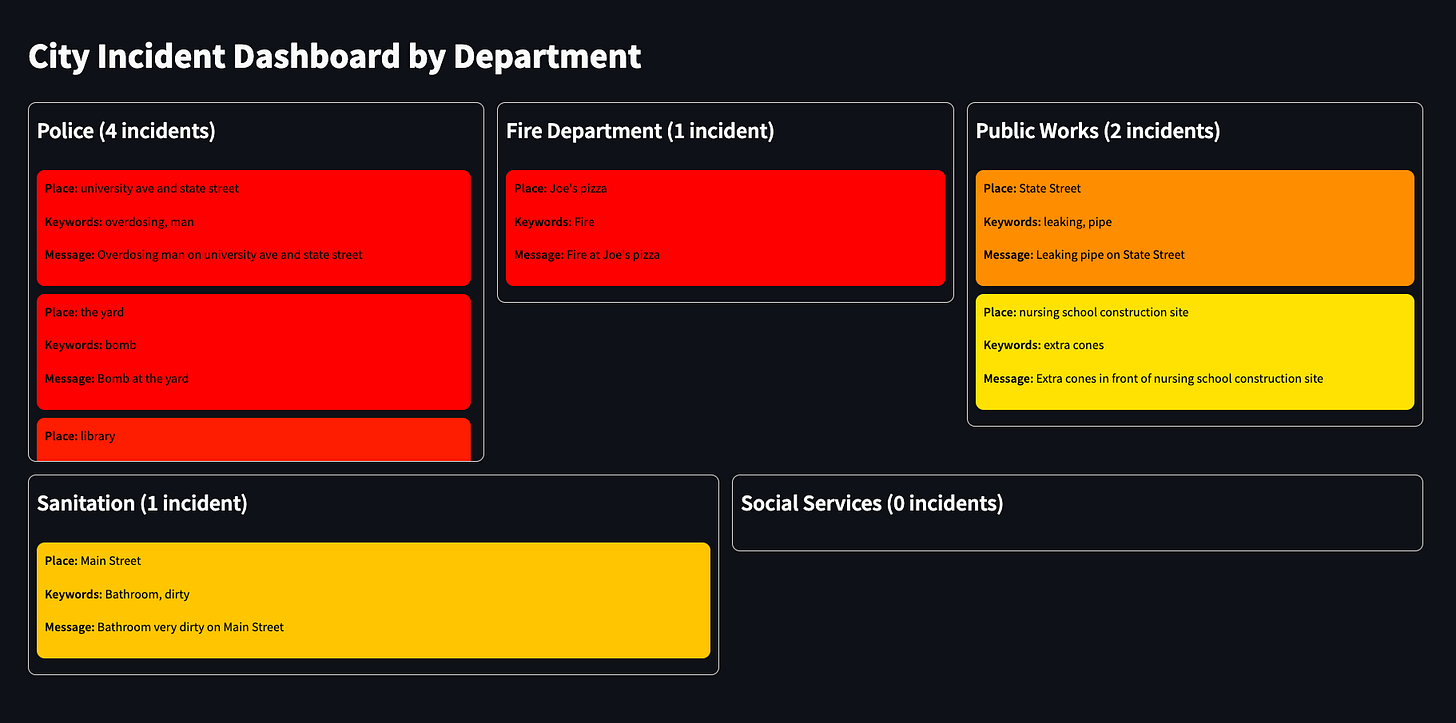

Here is a screenshot of the Streamlit dashboard with example text message incident reports:

Each department has its own panel containing incident reports sorted by urgency (red = high urgency, yellow = low urgency). Each incident report shows the place, the keywords, and the full message.
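The panel layout can be sketched as a group-by followed by a sort. This version assumes each processed report is a dict with a `department` string and an integer `urgency` from 1 to 10, puts reports with an empty department into a hypothetical `Unassigned` panel, and blends linearly from yellow (urgency 1) to red (urgency 10); the project's actual color scale is not documented here, so treat the mapping as an illustration.

```python
from collections import defaultdict
from typing import Dict, List


def urgency_color(urgency: int) -> str:
    """Hex color blended linearly from yellow (urgency 1) to red (urgency 10)."""
    u = max(1, min(urgency, 10))   # clamp to the 1-10 scale
    t = (u - 1) / 9                # 0.0 at urgency 1, 1.0 at urgency 10
    green = round(255 * (1 - t))   # yellow is (255, 255, 0), red is (255, 0, 0)
    return f"#ff{green:02x}00"


def panels(reports: List[dict]) -> Dict[str, List[dict]]:
    """Group reports into one panel per department, most urgent first."""
    grouped: Dict[str, List[dict]] = defaultdict(list)
    for report in reports:
        # Reports with no department go to a hypothetical "Unassigned" panel.
        grouped[report["department"] or "Unassigned"].append(report)
    for items in grouped.values():
        items.sort(key=lambda r: r["urgency"], reverse=True)
    return dict(grouped)
```

With this mapping, `urgency_color(1)` gives `"#ffff00"` (yellow) and `urgency_color(10)` gives `"#ff0000"` (red). Python's sort is stable, so reports with equal urgency keep the order in which they arrived.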

This is how the spreadsheet looks after it has been populated by the Google Apps Script and then processed by the ChatGPT API:

And here is a demo video showing how it works in real time:

Reflections:

First, this assignment helped me realize that coding is truly no longer reserved for software engineers. I leaned heavily on ChatGPT for the actual coding, guided by detailed prompts describing what I wanted each part to do.

As I stated earlier, this project was driven by my studies in another class for my urban technology degree. I think being a UT student helped me develop a sense for what type of product might actually succeed in this context: I can’t think of a method of public reporting with less friction than a simple text message. The whole idea was to make it easy for the public to alert the government to issues while ALSO making it easy for the government to act on them. My implementation allows both of these to happen, and I don’t think I would have come up with this approach if I had not been aware of the frictions of government bureaucracy and the difficulties urban residents face when trying to get the government’s attention.

I am curious whether this application could actually work well in a real-world environment. At the scale of a densely populated city, I think it would be quite difficult to guard against, or filter out, incident reports that do not really require government attention. I think the next step would be to create a map of incidents: using NLP to detect the place name in each message, then finding that place on a map and putting a pin down.

All in all, this project got me excited to work on more urban-related products that create win-win situations for multiple stakeholders.